Exploring AWS IoT Analytics

You may have heard of the "Internet of Things" (IoT), which can call to mind "smart light bulbs" or "smart electrical outlets" or "smart doorbells". Usually when consumers talk about IoT they talk about Home Automation, which is only a single application of IoT. The patterns can be used for massive problems, like Smart Grid applications that try to minimize energy usage and cost for a large area, or large-scale agricultural applications where all manner of soil, weather, and machine data are collected and analyzed to maximize yields for a season.

IoT at scale consists of myriad "edge devices" (light bulbs, doorbells, soil analyzers, tractors/harvesters), usually with a collection of sensors, which send data to the cloud in large quantities. This data is then analyzed, often using machine learning algorithms, to help maximize or minimize certain outcomes.

I've had my eye on the IoT space for a while (motto: "IoT: the 'S' is for 'Security'") because it's a really cool intersection between embedded (i.e. blinking lights) and cloud. But where to start?

Clarify the goals

We decided to invest in an internal learning initiative, for which we would spend Actual Money and Time to learn. But IoT encompasses a lot of different disciplines, and we don't want to invest a lot of effort learning something less relevant to the market (or in something we already know), so we felt it super important to be extremely clear in the goals we set.

For example, we have already demonstrated capabilities with regard to Software-as-a-Service (SaaS), Infrastructure-as-a-Service (IaaS), and Platform-as-a-Service (PaaS) offerings such as AWS and Azure, so we have security, deployment, provisioning, architecture, etc. already covered. It probably wouldn't be smart to invest too much in those areas.

Additionally we invested in a similar learning initiative a few months ago where the goals were around demonstrating machine learning capability. Given that initiative and the capabilities demonstrated we didn't feel the need to generate insights from vast quantities of "real world" data - simply ingesting and storing fake data would do nicely.

Removing those two goals from consideration crystallized what we actually wanted to demonstrate with this learning initiative:

How do we demonstrate effectively gathering, storing, and presenting data for analysis from edge devices into a cloud offering?

Pick a domain

Once we decided on a goal statement it was time to look at domains of application. If you look at the Wikipedia page for Internet of Things you can see that IoT patterns are applied to the following domains:

- Smart home

- Elder care

- Medical and healthcare

- Transportation

- V2X communications

- Building and home automation

- Manufacturing

- Agriculture

- Metropolitan scale deployments

- Energy management

- Environmental monitoring

Many of these were simply impossible from a resource or knowledge perspective. For example, all medical domains were excluded because none of us are medical professionals, although I personally have watched nearly 6 seasons of "House, MD". The Agricultural and Manufacturing domains were excluded because we don't have land, tractors, or factories at our disposal.

I spent some time working in HVAC in my 20s, so I chose "something to do with furnaces" in the Smart Home domain.

Constructing a test harness

Now that we have a domain and some goals, how do we decide what data to gather? And how to gather it?

Well, first let me state that I am not interested in shocking or burning myself with any regularity, so despite choosing an HVAC domain I wanted to avoid actual contact with a running furnace.

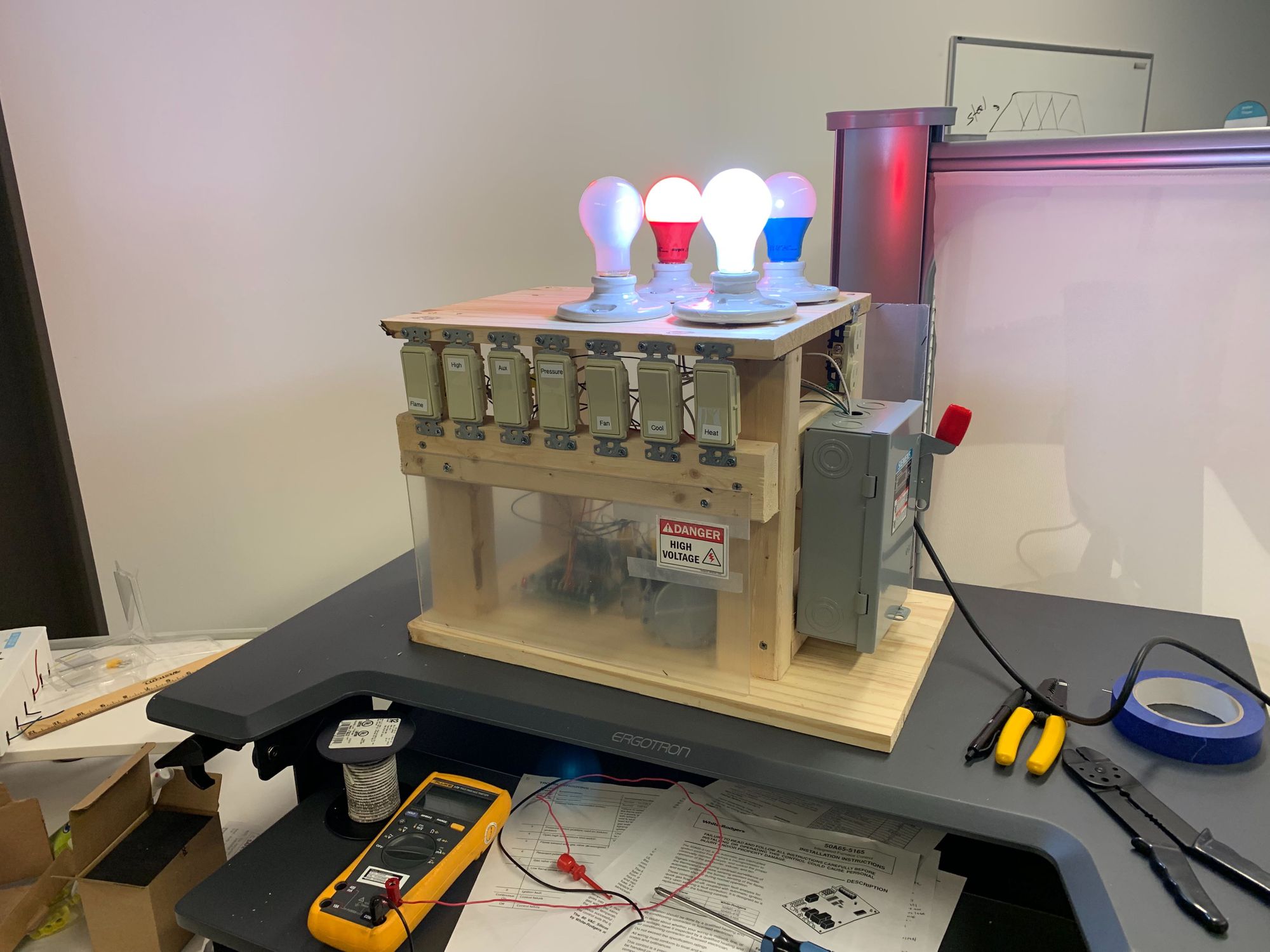

I requested a furnace control board (similar to the White-Rodgers 50A65-5165) and went to Lowe's to buy a bunch of hardware. Instead of using a blower motor, an inducer motor, or a heating element, I used a number of light bulbs. Instead of a high limit switch, a pressure switch, or a flame sensor, I used a number of light switches.

The Fake Furnace needed a real transformer so that the inputs and peripherals that expected 24V AC would get the correct type of voltage. Additionally I decided to request (and use) a real gas valve (disconnected from any actual gas source, of course) because I didn't want to have to understand the characteristics of one enough to fake it out.

The Fake Furnace can take the Real Control Board all the way through a standard heating cycle:

- Flip the switch for `heat`, which represents the thermostat calling for heat

- After a second or two the `inducer` light bulb comes on. The control board has turned on the inducer motor, wanting to clear the exhaust line and bring fresh air in

- Since the inducer motor is running the control board expects the pressure sensor to trigger, so we flip the `pressure` switch

- The control board activates the ignitor circuit for 15 seconds to prepare to light the burner

- At the end of the 15 second ignitor window, the control board opens the gas valve and expects to sense a flame signal within 4 seconds. We flip the `flame` switch

- The control board senses the flame and shuts off power to the ignitor. The board lets the heat exchanger warm up for 45 seconds and then it kicks on the blower motor, so the lightbulb labeled `heat` turns on

When we're done heating, we follow these steps:

- Turn off the `heat` switch

- The gas valve will immediately close and the control board will want to sense a lack of flame pretty quickly, so we turn off the `flame` switch

- The inducer motor will run for an additional five seconds, clearing the exhaust line. When the control board shuts off the inducer, it will expect a drop in pressure, so we turn off the `pressure` switch

- The heat blower runs for an additional 60 seconds to clear excess heat out of the exchanger, after which the `heat` bulb shuts off

From the perspective of the control board everything is operating as it should. The board has no idea that most of its peripherals are fake; it operates as though it were in a real furnace.
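The two sequences above can also be written down as data. The sketch below is only a paper model of what this article describes, not anything that ran on the board: `Step` and `timeline` are made-up names, the 2 second inducer delay stands in for "a second or two", the operator is assumed to flip each switch instantly, and the 60 second blower run-on is assumed to count from the moment the `heat` switch goes off.

```python
from dataclasses import dataclass
from itertools import accumulate


@dataclass(frozen=True)
class Step:
    delay_s: int  # seconds after the previous step (assumed values, see above)
    event: str    # what the board does and which switch we flip in response


HEAT_CYCLE = [
    Step(0, "flip heat switch on (thermostat calls for heat)"),
    Step(2, "inducer bulb lights; flip pressure switch on"),
    Step(0, "board energizes the ignitor"),
    Step(15, "board opens gas valve; flip flame switch on within 4 s"),
    Step(0, "board cuts ignitor power; heat exchanger warms up"),
    Step(45, "board starts the blower; heat bulb lights"),
]

SHUTDOWN = [
    Step(0, "flip heat switch off; gas valve closes; flip flame switch off"),
    Step(5, "inducer stops; flip pressure switch off"),
    Step(55, "blower stops; heat bulb goes dark"),
]


def timeline(steps):
    """Pair each event with its elapsed time in seconds from the first step."""
    elapsed = accumulate(step.delay_s for step in steps)
    return [(t, step.event) for t, step in zip(elapsed, steps)]


for name, steps in (("heat cycle", HEAT_CYCLE), ("shutdown", SHUTDOWN)):
    print(name)
    for t, event in timeline(steps):
        print(f"  {t:>3} s  {event}")
```

Keeping the timings in one table makes it easy to see how long the operator has to wait between switch flips.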

Selecting data to push to the cloud

Now that we can gather data, which data should we gather? The tricky part of picking a data source is finding data that's meaningful enough. We don't want to use any "sensor" data because the sensors are light switches. Similarly, any current draw we might want to measure from things like fans is also fake.

The chosen control board has an adaptive algorithm for how much power it sends to the ignitor (the heating element that lights the burner). The board starts at about 50% power and lowers the power on every heating cycle until the burner fails to ignite, then it backs off to a power level that recently produced a flame. That adaptive algorithm seemed like a good place to start on data acquisition, since the data comes from the only real component in the system.
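To make that behavior concrete, here is a toy simulation of it. This is a guess at the shape of the algorithm based only on the description above, not the board's actual firmware: the function name, the 5 point step size, and the `min_ignite_pct` threshold are all invented for illustration.

```python
def ignitor_levels(min_ignite_pct, start_pct=50, step_pct=5, cycles=6):
    """Return the ignitor power (in percent) used on each heating cycle.

    min_ignite_pct is a hypothetical threshold: the burner lights only when
    the ignitor gets at least this much power.
    """
    levels = []
    power = start_pct
    last_good = start_pct
    settled = False
    for _ in range(cycles):
        levels.append(power)
        if settled:
            continue  # keep using the level we backed off to
        if power >= min_ignite_pct:
            last_good = power   # remember a level that produced a flame
            power -= step_pct   # try a little less next cycle
        else:
            power = last_good   # ignition failed: back off to the last good level
            settled = True
    return levels


print(ignitor_levels(min_ignite_pct=38))  # [50, 45, 40, 35, 40, 40]
```

The interesting part for data collection is the staircase: each cycle reports a slightly different power level, so even a fake furnace produces a signal that changes over time.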

We used a Feather HUZZAH32 from Adafruit running an application written with Mongoose OS to ship the data up to AWS IoT Core. The data itself was taken from a Hall-effect current sensor from SparkFun. The ESP32 was programmed to calculate the duty cycle of the AC voltage sent to the ignitor and report that to the cloud every second.

Said more simply, we started measuring how much power was sent to the ignitor and sent our measurements to the cloud.
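For a rough idea of what "calculate the duty cycle" can mean, here is one simple way to do it, written in Python for readability. It is not the Mongoose OS code that ran on the ESP32: the function name, the noise threshold, and the idea of counting "conducting" samples in a one-second window are all assumptions made for this sketch.

```python
def duty_cycle(samples, noise_threshold):
    """Fraction of current samples in a window that show the load conducting.

    samples: current readings (amps) taken at a steady rate over the window.
    noise_threshold: readings with a magnitude at or below this count as "off".
    """
    if not samples:
        return 0.0
    conducting = sum(1 for amps in samples if abs(amps) > noise_threshold)
    return conducting / len(samples)


# 100 made-up readings: 55 near zero, 45 with current flowing in either direction.
window = [0.01] * 55 + [0.6, -0.6, 0.5] * 15
print(duty_cycle(window, noise_threshold=0.05))  # 0.45
```

Taking the absolute value matters because the current is AC, so half of the "on" samples are negative.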

Incidentally, the part from SparkFun that measures current seemed to have a hard time when the lightbulb that represented the ignitor was an LED bulb, because those things use very little current. In order to get an appreciable reading I had to purchase a good old fashioned incandescent bulb. I expect to get a sternly worded letter from Al Gore any day now.

Get data in the cloud

AWS IoT supports multiple ingestion patterns, but they all start with the edge device publishing an MQTT message on an IoT Topic.

In our case the ESP32 would publish a single MQTT message on a single IoT Topic every second. Here's an example message:

    {
      "timestamp": "2019-05-05T00:00:00.000Z",
      "uid": "esp32_F2DEEE",
      "dutyCycle": 0.45
    }

This message indicates that the ESP32 sensed that the ignitor lightbulb was provided power at 45% of its potential maximum. For times when the signal is idle, the `dutyCycle` field will be 0.
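If you want to generate messages of the same shape without any hardware (remember, fake data was fine for our goals), a few lines of Python will do. The `build_message` helper is a made-up name for this sketch, and the range check on the duty cycle is my own assumption about what counts as a valid value; the field names and timestamp format are copied from the example message.

```python
import json
from datetime import datetime, timezone


def build_message(uid, duty_cycle, when):
    """Serialize one reading in the same shape as the example message."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    # ISO 8601 in UTC with millisecond precision and a trailing "Z".
    stamp = when.astimezone(timezone.utc).isoformat(timespec="milliseconds")
    stamp = stamp.replace("+00:00", "Z")
    return json.dumps({"timestamp": stamp, "uid": uid, "dutyCycle": duty_cycle})


payload = build_message("esp32_F2DEEE", 0.45, datetime(2019, 5, 5, tzinfo=timezone.utc))
print(payload)
```

The resulting string is what would go into the body of the MQTT message.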

There are many sub-services within the AWS IoT offering. The primary two we looked at for this learning initiative were IoT Core and IoT Analytics. IoT Core is the service that defines all the edge devices (called Things), how they connect and transmit data (through Certificates and Topics), and what happens when a message is published (through Rules and Actions). I wrote an article a few years ago about all the bits and bobs that were eventually collected into the IoT Core offering.

For the purposes of this learning initiative we used the AWS IoT Analytics service to shortcut a lot of the infrastructural overhead needed to get a purely custom solution up and running. IoT Analytics allows you to create:

- A Channel that reads from an IoT Topic and pushes messages through a Pipeline

- A Pipeline that allows arbitrary operations to be performed on data. Maybe you want to fetch a weather report and augment your data packet with the current temperature, or maybe you want to calculate a value based on a message's fields and include that value in your message

- A Data Store that messages are stored to once the pipeline has finished processing

- A Data Set that represents a specific report a user might try to pull from the data collected thus far

We created a channel that read from the device's IoT Topic, a pipeline that stored the unedited message to a data store, and a data set that pulled the duty cycle information out of the data store.
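We set these up through the console, but the same four pieces can be scripted. The sketch below shows roughly what that could look like with the `iotanalytics` client from boto3; treat it as an untested outline rather than our actual setup. The `provision` function and every resource name are invented, the query is a plain `SELECT *` instead of our real data set query, and the IoT Core rule that forwards the Topic's messages into the channel is not shown.

```python
def provision(client, prefix="furnace"):
    """Create a channel, data store, pass-through pipeline, and data set.

    client is expected to behave like boto3.client("iotanalytics").
    All resource names are hypothetical.
    """
    channel = f"{prefix}_channel"
    datastore = f"{prefix}_datastore"

    client.create_channel(channelName=channel)
    client.create_datastore(datastoreName=datastore)

    # Two activities: read from the channel, then store the unedited message.
    client.create_pipeline(
        pipelineName=f"{prefix}_pipeline",
        pipelineActivities=[
            {"channel": {"name": "read_channel",
                         "channelName": channel,
                         "next": "store_message"}},
            {"datastore": {"name": "store_message",
                           "datastoreName": datastore}},
        ],
    )

    # A data set is a saved SQL query against the data store.
    client.create_dataset(
        datasetName=f"{prefix}_duty_cycle",
        actions=[{"actionName": "duty_cycle_query",
                  "queryAction": {"sqlQuery": f"SELECT * FROM {datastore}"}}],
    )
```

Assuming boto3 is installed and AWS credentials are configured, usage would be along the lines of `provision(boto3.client("iotanalytics"))`.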

IoT Analytics also allows you to retain the raw data indefinitely and re-run all packets through a new or updated pipeline, in case you have updated business rules and want your calculated/aggregated data to reflect those rules.

IoT Core Rules can also send a Topic's messages to Lambdas or other AWS services, so if you wanted to build a custom data product to handle the data ingestion, that is absolutely doable.

Get data out of the cloud

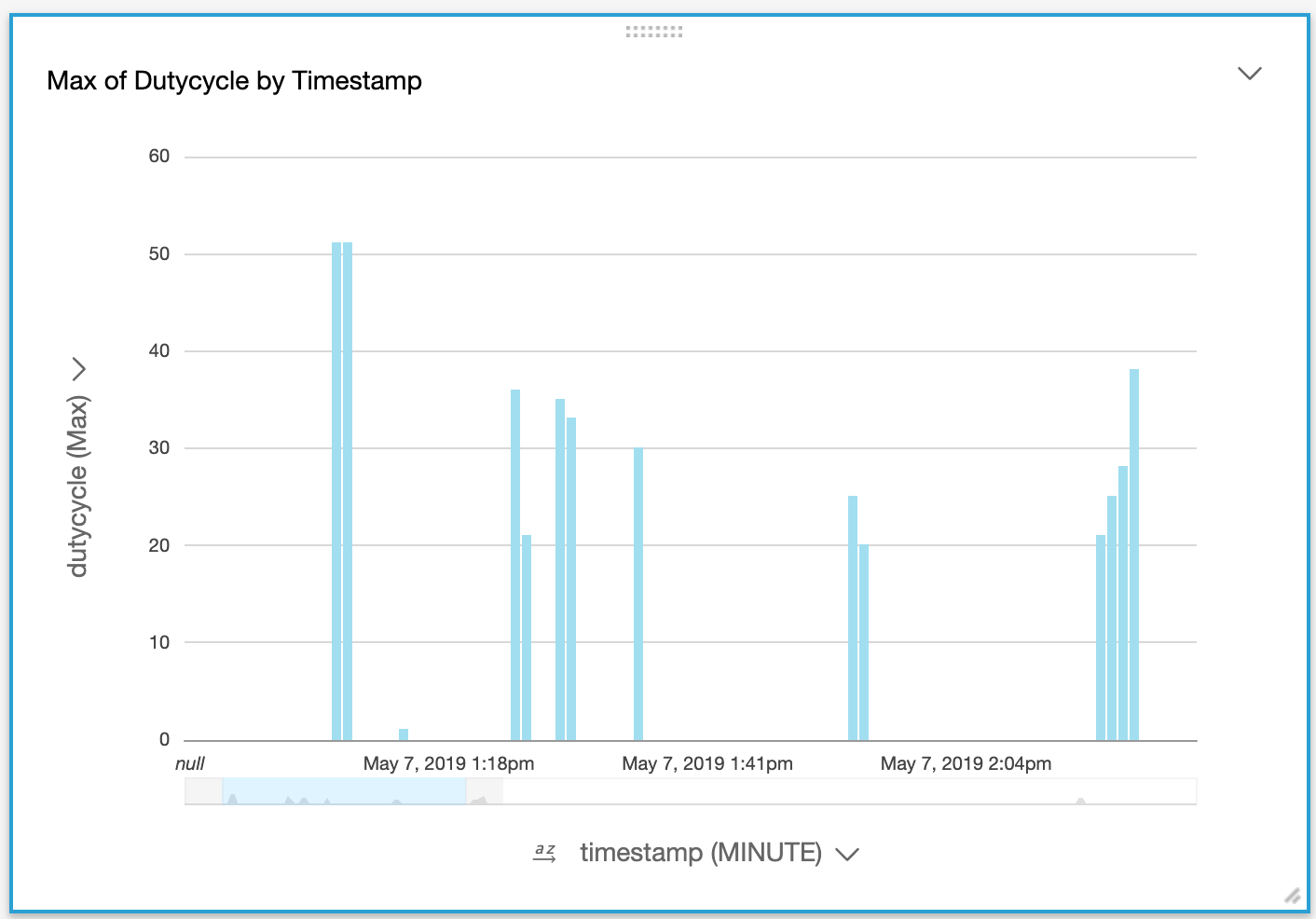

Once you have a data set you can plug it into AWS's QuickSight offering and get a pretty decent graphical representation of your data. I was able to generate this graph without any difficulty:

You can see the control board's adaptive algorithm for the ignitor at work in this graph. The first ignition sequence runs at about 50% and each subsequent cycle runs at a lower percentage. The last cycle I let the furnace think there was no flame, so the percentage went up again until I hit the flame switch.

If your data sets are large or you want to experiment with machine learning algorithms, IoT Analytics allows you to super easily export your data to a Jupyter notebook via the SageMaker service.

I elected not to dive too deeply into this particular application because the previous learning initiative covered this area in depth. And also I'm not that good with Python.

What we learned

Let me break out the itemized list:

- If you don't want to spend a lot of time architecting a robust data product or managing the lifecycle of an IoT message, IoT Analytics can get you a pretty solid jumpstart.

- If you do want to create a custom, robust data product it will integrate pretty easily with IoT Core and IoT Analytics.

- Pulling a high-level report is super easy from the AWS console thanks to QuickSight.

- AWS SageMaker is a built-in Machine Learning sandbox for your IoT data.

- It's super fun to build stuff that could shock the absolute butts out of you. Incidentally, I blew a 15 Amp fuse when I connected an oscilloscope to a circuit incorrectly. Better than coffee.

What's next

From a product perspective we could analyze the phase angle between the voltage and current on the blower motor to determine how effectively it is running. If we collect decent enough data we might be able to predict a short in the motor, a bad start capacitor, or maybe even that the filter should be replaced.

Of course, to do that we would need to hook our system up to a real furnace, which would probably void the homeowner's insurance policy. So I won't tell if you don't.
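For the curious, the phase-angle idea is not much math. One textbook approach, sketched below, is to compare real power with apparent power: the ratio is the power factor, and its arccosine is the phase angle. This is not something we built. The function name and the sample data are invented, and the sketch assumes clean sinusoidal waveforms sampled over a whole number of cycles, which a real motor will not give you for free.

```python
import math


def phase_angle_deg(voltage, current):
    """Estimate the phase angle (degrees) between two sampled waveforms.

    Uses power factor = real power / (Vrms * Irms). Returns the magnitude
    only; it cannot tell whether the current leads or lags the voltage.
    """
    n = len(voltage)
    real_power = sum(v * i for v, i in zip(voltage, current)) / n
    v_rms = math.sqrt(sum(v * v for v in voltage) / n)
    i_rms = math.sqrt(sum(i * i for i in current) / n)
    power_factor = real_power / (v_rms * i_rms)
    power_factor = max(-1.0, min(1.0, power_factor))  # guard against rounding
    return math.degrees(math.acos(power_factor))


# Made-up test signal: ten full cycles, current lagging voltage by 30 degrees.
angles = [2 * math.pi * k / 100 for k in range(1000)]
volts = [math.sin(a) for a in angles]
amps = [math.sin(a - math.radians(30)) for a in angles]
print(round(phase_angle_deg(volts, amps), 3))  # 30.0
```

Tracking how that angle drifts over weeks is the kind of signal that might hint at a failing capacitor or a clogged filter.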

From a learning perspective we need to spend some time with the Device Shadow (which is a RESTful interface that holds/updates the state of the device) and AWS IoT Jobs (which are documents that can be sent to IoT devices indicating software updates or tasks that need to be executed). Either could warrant a new custom test harness!

Either way, I am really enjoying these blinking lights.